As more hospitals turn to big data techniques to address clinical problems, the healthcare sector has reached a turning point in the evolution of treatment. The underlying aim of these techniques is to remove the uncertainty around relapses that shows up in patient health statistics. Thanks to newer and more sophisticated ways of collecting data on illness parameters and demographics, the beneficial effect of big data on decisions made in critical circumstances is now plain to see.

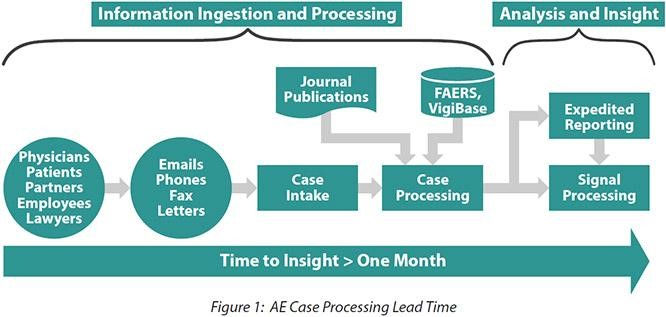

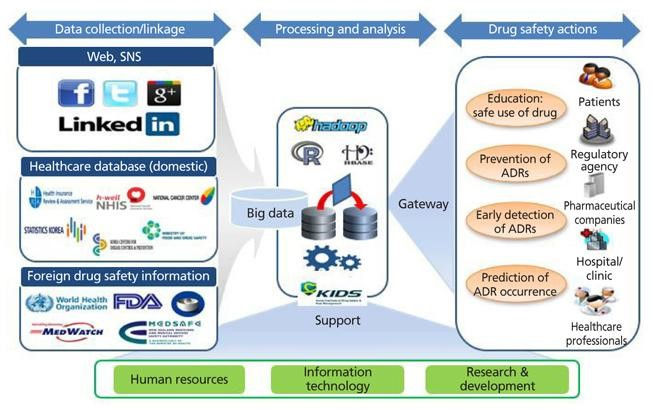

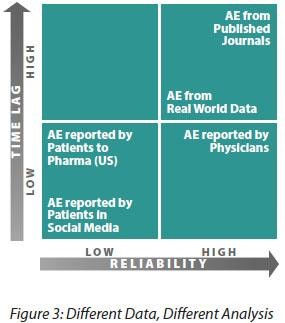

Pharmacovigilance is the branch of pharmacology that seeks to reduce the adverse effects and reactions associated with medication by examining consumption patterns in representative populations. Because each trial generates enormous amounts of data, both numerical and contextual, big data has emerged as a preferred method for navigating clinical repositories.

History Of Big Data In Healthcare

During the 1950s and 1960s, computers evolved from enormous machines into increasingly sophisticated systems housed in research centres. John von Neumann imagined computers like the ENIAC and UNIVAC as standard tools for calculation and cutting-edge military projects. But once the contemporary computer emerged, the idea of using big data as a tool for model development changed.

The storage and collection techniques improved with the development of smaller, quicker devices, paving the way for a new wave of problems that could be solved with higher accuracy.

The story of big data entering healthcare and pharmacovigilance begins with the introduction of analysis software such as Stata. These tools were mainly used to study the onset and likelihood of disease in patient cohorts. What would eventually become known as "real-world data" was captured in tabular and comma-separated value files. The term refers to information derived from patient administrative histories and electronic records. Both sources have benefits and drawbacks when it comes to longitudinal coverage, patient centricity, and accuracy.

Big data was used to build reliable, well-founded models that were applied in studies of patients suffering from conditions such as cancer and heart disease, and even minor illnesses.

In the early days of big data, there were many different types of data available to scientists. Some were collected directly from patients, while others came from other sources like weather stations or satellites. Scientists could then combine all of these data together to create models that would help them understand what caused diseases and how to treat them. These models could then be applied to future patients to see if they had similar symptoms and if they responded well to certain treatments.

The Role Of Real World Data

Published methods such as the Monte Carlo method and convex optimization helped pave the way for safe new compounds that have made lives easier everywhere. But reaching this point was not an easy journey. Real-world data has played a large role in helping analysts find new ways of looking at how illnesses and patients interact, within a more homogeneous framework of network models.

Real-world data, in simpler terms, is raw data acquired through the assessment and analysis of patients in real-life settings. The focus here is to eliminate the uncertainty that black-box models end up introducing into decision-making, by having crisp outcomes to work with. Real-world data is difficult to obtain and collect, given the many ways in which the records are linked to health information such as blood pressure, ECG readings, lung capacity and trauma. In the context of big data modelling for health centres, real-world data is useful for building a contextual picture of the patient without sacrificing smaller nuances.

Real-world data comprises the records of a patient’s hospital stay and everything observed from them: any data generated during the patient’s admission, which serves as the first resource when building predictive models. It can also come from registries and from clinical or pharmaceutical trials that the patient is undergoing as part of treatment. More broadly, real-world data consists of information collected by patients and healthcare providers, such as medical records, lab tests and images, healthcare claims, surveys, and insurance information. It can be powerful when used in the right way. According to Health Research Funding, real-world data is “manifested in the patient’s records” and serves as a source of information for “predictive models” that can help physicians make better diagnoses.

What Is Decision Relevant Evidence?

Picture the typical analytics landscape: swathes of data rows and repositories littered across servers, with case validations spread all over the floor. It is rarely questioned that big data is a long and laborious process that does not stop at creating accurate models. The idea behind any typical big data framework is to build a model that can stand the test of new records, regardless of variations and fluctuations. In the medical and health fields, such problems are greatly magnified by the disconnect between the parameters that machines measure and what those parameters tell decision-makers. For example, in a clinical trial where some subjects show certain drug effects and others do not, the difference can too quickly be attributed to the drug. Risks and fallacies such as mistaking correlation for causation are common.
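To make the correlation-versus-causation risk concrete, here is a minimal sketch in Python using only the standard library. Everything in it is an illustrative assumption rather than data from any real study: the function names `simulate` and `recovery_rate`, the single "young versus old" confounder, and all of the probabilities. The simulated drug has no effect at all, yet treated patients appear to recover more often, simply because younger patients are both more likely to be treated and more likely to recover.

```python
import random


def simulate(n=50000, seed=0):
    """Generate hypothetical patients as (young, treated, recovered) tuples.

    All probabilities are made up for illustration. Recovery depends only
    on age group, never on treatment, so the drug has zero true effect.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        young = rng.random() < 0.5
        # Assumption: younger patients are more likely to receive the drug...
        treated = rng.random() < (0.8 if young else 0.2)
        # ...and more likely to recover, whether or not they were treated.
        recovered = rng.random() < (0.7 if young else 0.3)
        rows.append((young, treated, recovered))
    return rows


def recovery_rate(rows, treated, young=None):
    """Share of recovered patients in a treatment arm, optionally per age group."""
    selected = [
        1 if r else 0
        for (y, t, r) in rows
        if t == treated and (young is None or y == young)
    ]
    return sum(selected) / len(selected)


if __name__ == "__main__":
    rows = simulate()
    # Naive comparison: ignores age, so the confounder leaks into the result.
    naive_gap = recovery_rate(rows, True) - recovery_rate(rows, False)
    print(f"naive treated-minus-untreated gap: {naive_gap:.3f}")
    # Stratified comparison: within each age group the gap shrinks toward zero.
    for young in (True, False):
        gap = recovery_rate(rows, True, young) - recovery_rate(rows, False, young)
        label = "young" if young else "old"
        print(f"gap within {label} group: {gap:.3f}")
```

Comparing patients within the same age group is only one simple way to expose a confounder. Real pharmacovigilance analyses typically have to account for many such variables at once, and not all of them are measured.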
